Evolution of AI hardware

Artificial intelligence (AI) models keep evolving. To run efficiently, however, AI needs hardware adapted to the operations it performs, so hardware must also evolve to keep pace with the growth of AI. This article reviews the evolution of AI, marked by three waves, before focusing on the hardware associated with each of them.

Three waves of AI

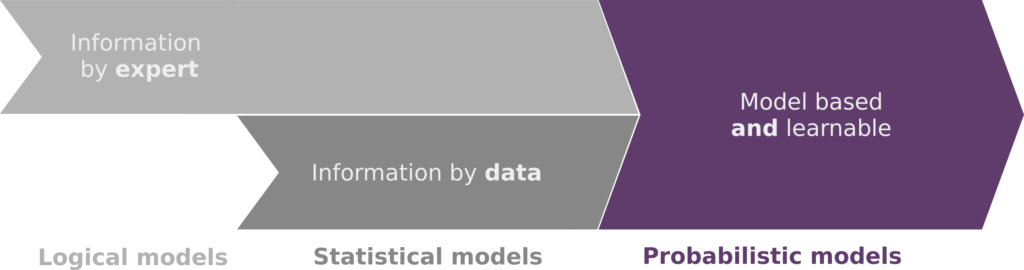

From the outset, AI has been divided into two categories: symbolic AI, characterized by symbols and logic, and connectionist AI, which favors behavior emerging from networks of interacting units. Over 70 years, each category has had its hour of glory, showing its own advantages and addressing the shortcomings of the other.

Times have changed. To build stronger and more efficient AI, both categories need to work together. It is time for a third category that combines the strengths of symbolic and connectionist AI. We present this third category through the lens of probabilistic AI.

Symbolic AI – Hour of glory: 1950 – 1990

Symbolic AI is based on rules and on high-level representations of knowledge. It uses logic and reasoning to make decisions. Mostly used in the twentieth century, Symbolic AI is also called classic AI or Good Old-Fashioned Artificial Intelligence (GOFAI).

Symbolic AI encompasses several families of techniques, the best known being expert systems, which model the reasoning of a human expert in a given field. They were commonly used in medicine and finance.

Highly controllable, Symbolic AI is clear and unambiguous. Its solutions are explainable: one can follow the logic of the reasoning that led to each decision. In return, Symbolic AI is rigid and requires substantial upfront work to define all the necessary representations and rules. Its decisions are limited to the problem as defined, and it does not handle generalization, exceptions, analogies, or situations outside its scope.
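To make rule-based reasoning concrete, here is a minimal forward-chaining sketch, the style of inference used by many expert systems. The rules and fact names (`fever`, `respiratory_infection`, and so on) are hypothetical and purely illustrative; they are not taken from any real medical system. The trace shows why this kind of AI is explainable, and it also hints at its rigidity: a fact that no rule mentions leads to no conclusion at all.

```python
# Hypothetical toy rules: each rule maps a set of required facts to one conclusion.
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "shortness_of_breath"}, "see_doctor_urgently"),
    ({"sneezing", "itchy_eyes"}, "allergy"),
]


def forward_chain(facts, rules):
    """Fire rules until no new fact can be derived.

    Returns the final set of known facts and a trace of (conditions, conclusion)
    pairs, in the order the rules fired, which explains every derived fact.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all its conditions are known and it adds something new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append((sorted(conditions), conclusion))
                changed = True
    return known, trace


if __name__ == "__main__":
    _, trace = forward_chain({"fever", "cough", "shortness_of_breath"}, RULES)
    for conditions, conclusion in trace:
        print(" AND ".join(conditions), "=>", conclusion)
```

Running the script prints the two rules that fired, in order. Calling `forward_chain({"headache"}, RULES)` returns the input unchanged with an empty trace, since no rule covers that fact.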

Connectionist AI – Hour of glory: 2010 – today

Connectionist AI is based on the interactions of small, interconnected units from which complex behavior can emerge. It is inspired by the connections between neurons in the brain. Its development accelerated with the arrival of artificial neural networks (ANNs), followed a few years later by deep neural networks (DNNs).

This kind of AI requires a lot of data to perform well. During a training phase, data are used to adjust the parameters of the model so that it learns statistical trends or appropriate associations. Widely used for classification and discrimination tasks, Connectionist AI is everywhere today thanks to the emergence of big data, in fields such as natural language processing and computer vision. However, its need for large amounts of data limits its adoption in domains where data are scarce.
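The training phase can be illustrated with the simplest connectionist unit, a perceptron. The sketch below is a toy, not how modern DNNs are trained (they rely on gradient descent with backpropagation): it learns the logical AND function from four labelled examples by nudging its weights after each mistake. The function names and the dataset are hypothetical.

```python
def predict(weights, bias, features):
    """Fire (output 1) when the weighted sum of inputs plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights and bias from (features, label) pairs with labels in {0, 1}.

    With all-zero initial parameters, lr only rescales the learned weights.
    """
    n_features = len(samples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)
            # Perceptron rule: parameters only move when the prediction is wrong.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Training data: the truth table of logical AND.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_DATA)
    print([predict(w, b, x) for x, _ in AND_DATA])
```

The learned numbers carry no explicit meaning on their own, which is a small-scale version of the explainability problem discussed next.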

Connectionist AI also requires a lot of computing resources. Generally run in data centers and accessed through cloud platforms, these models consume a great deal of energy. In a world where resources are becoming scarce, AI has to strive for frugality.

Another current problem is the lack of explainability of Connectionist AI. Because its parameters are learned from huge amounts of data, there is no visibility into what they actually mean. As a result, its decisions are hard to understand: models behave like black boxes that transform inputs into outputs without any real explanation of the output produced.

Probabilistic AI – Hour of glory: Today and tomorrow

Probabilistic AI relies on probability theory and a high-level representation of the world. Like Connectionist AI, it is set up during a training phase; it then makes decisions during an inference phase, using probabilistic reasoning.

By combining the structured representations of Symbolic AI with the learning from data of Connectionist AI, it gains several advantages over the other approaches:

- Thanks to its initial structure, Probabilistic AI can learn from less data. It is therefore more energy-efficient and needs less time to perform well.

- By its nature, it can be as precise as Symbolic AI while being able to generalize and exploit statistical trends like Connectionist AI.

- It is explainable: inference in Probabilistic AI relies on dedicated operations that can be decomposed into individual steps.

- It handles multiple tasks more easily than other AIs, since the same model can be asked several different questions. Other AIs are generally built to perform one specific task.
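The last two points can be illustrated with a tiny Bayesian network. This sketch assumes made-up probabilities for the classic rain/sprinkler/wet-grass example (none of these numbers come from the article), and it uses exact inference by enumeration, one simple technique among many. Each answer decomposes into explicit sums of products over the model's factors, and the same model answers several different questions without retraining. The names `joint` and `query` are hypothetical.

```python
from itertools import product

# Hypothetical priors for two independent causes of wet grass.
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: 0.1, False: 0.9}
# Hypothetical P(wet = True | rain, sprinkler).
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability, factorised along the structure of the model."""
    p_wet = P_WET[(rain, sprinkler)]
    return P_RAIN[rain] * P_SPRINKLER[sprinkler] * (p_wet if wet else 1.0 - p_wet)


def query(target, evidence):
    """P(target = True | evidence), by enumerating all assignments of the variables."""
    names = ("rain", "sprinkler", "wet")
    numerator = denominator = 0.0
    for values in product((True, False), repeat=len(names)):
        world = dict(zip(names, values))
        if any(world[name] != value for name, value in evidence.items()):
            continue  # this assignment contradicts the evidence
        p = joint(**world)
        denominator += p
        if world[target]:
            numerator += p
    return numerator / denominator


if __name__ == "__main__":
    # Several questions asked to the same model.
    print("P(wet)                   =", query("wet", {}))
    print("P(rain | wet)            =", query("rain", {"wet": True}))
    print("P(sprinkler | wet)       =", query("sprinkler", {"wet": True}))
    print("P(rain | wet, sprinkler) =", query("rain", {"wet": True, "sprinkler": True}))
```

With these numbers, `query("rain", {"wet": True})` is about 0.74, and it drops to about 0.24 once we also learn that the sprinkler was on: the sprinkler "explains away" the wet grass. Exact enumeration grows exponentially with the number of variables, so practical systems use more efficient inference algorithms.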

Probabilistic AI models are very efficient, but current hardware is not well suited to performing some of the probabilistic operations used during inference in a competitive way.

Evolution of AI Hardware

CPU

The Central Processing Unit (CPU), also called the processor, is the chip that executes instructions on a device. It tells each component what to do according to the instructions it receives from software programs. With its Control Unit (CU) and its Arithmetic-Logic Unit (ALU), two main components of the von Neumann architecture, it performs arithmetic and logic operations very well. Its arrival made Symbolic AI practical to use. It can also run simple Connectionist AI models such as Recurrent Neural Networks (RNNs).

GPU

The Graphics Processing Unit (GPU) is a chip dedicated to graphics and image processing. Designed specifically for parallel processing, it splits large computations into many small tasks and executes them simultaneously. Its creation considerably accelerated image and video rendering and, by extension, many other applications such as AI models. It made machine learning applications easier to deploy, especially in computer vision.

DPU

The Data Processing Unit (DPU) is, as the name suggests, a chip specialized in data processing. Designed for applications with large-scale data-processing needs, DPUs are found in data centers and supercomputers. Their high performance comes from the combination of three main components: a multi-core CPU, a network interface, and a set of acceleration engines. Their ability to handle large amounts of data makes them particularly efficient for deep learning models that require considerable training data.

CPUs, GPUs and DPUs are not AI-centric. They execute AI models and enable the creation of more powerful and efficient AI engines, but they were not originally designed for AI applications. CPUs were created to run general-purpose computer programs, GPUs to accelerate graphics computations, and DPUs to handle big data. This is why units dedicated to AI applications have recently emerged: AI accelerators.

In the family of AI accelerators, the best-known processors are Neural Processing Units (NPUs), also called neural processors. They are processors specifically designed to accelerate machine learning algorithms. At first, there were generally two types of NPUs: those that accelerated training and those that accelerated inference. Today, accelerators can handle both, although the two operations are generally still performed separately.

DLP

One popular example of an NPU is the Deep Learning Processor (DLP), a processor optimized for deep learning algorithms. A well-known DLP is the Tensor Processing Unit (TPU), an AI accelerator Application-Specific Integrated Circuit (ASIC) developed by Google, originally for use with Google's own TensorFlow software.

Another example of an NPU is the Intelligence Processing Unit (IPU) developed by Graphcore. It is based on a highly parallel, memory-centric architecture that is very different from those of CPUs and GPUs. It uses MIMD (Multiple Instruction, Multiple Data) parallelism, and its memory is local and distributed.

NPUs are general-purpose AI accelerators that can be used for any application involving neural processing. There are also AI processors dedicated to specific applications, such as the Vision Processing Unit (VPU), designed for machine vision. One of its main advantages is its low energy and data consumption compared to GPUs.

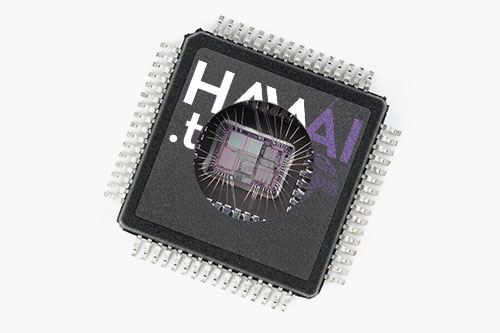

Most of the AI accelerators presented here are dedicated to the second category of AI: Connectionist AI. However, the new wave, which combines Symbolic and Connectionist mechanisms, also requires an appropriate processor to perform well. The goal of HawAI.tech is to create hardware dedicated to Probabilistic AI: the Bayesian Probabilistic Processing Unit (B2PU).

B2PU

The Bayesian Probabilistic Processing Unit is an accelerator dedicated to probabilistic inference. It will manipulate probability distributions more efficiently and improve the overall performance of Probabilistic AI in terms of time and energy. Combining efficiency and frugality in a single chip, it will be particularly well suited to the needs of AI at the edge.

The future is coming

This article has shown how dedicated hardware has emerged to meet the needs of AI in terms of performance and computation speed. As a new wave of AI arrives, there is a significant need for dedicated hardware that handles its specific operations and fully exploits its potential. The B2PU from HawAI.tech will be this hardware, specifically designed for Probabilistic AI.